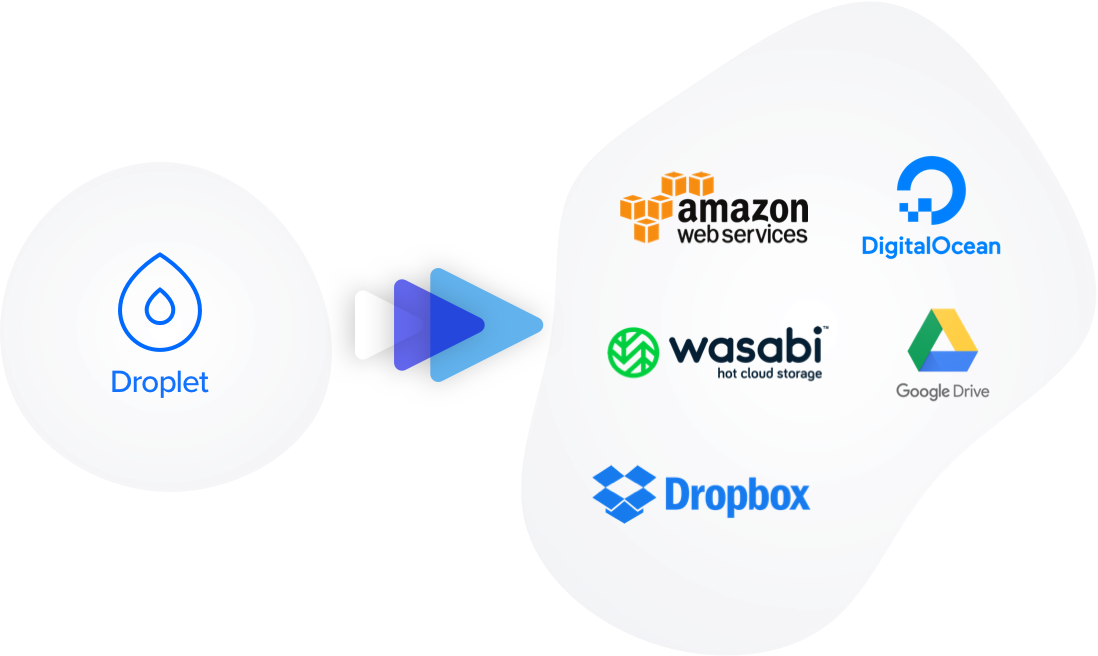

DigitalOcean + Wasabi

Wasabi 'Buckets' are additional storage that can be mounted inside a virtual machine or accessed directly from Django through libraries such as Django-Storages. Once mounted, a bucket looks the same as a directory (or folder). By all appearances it is a local folder, but in reality it is a view of storage that lives elsewhere and is reached over the internet. With these sorts of connections (or mounts) you may notice some lag when opening and accessing folders, since they are not local to the virtual machine. Whether this affects your use case is easy to test, because Wasabi offers a free 30-day trial with 1TB of storage.

Directly mounting S3 storage with S3FS

The following subsection describes the steps that I followed to set up a Wasabi Bucket inside of a DigitalOcean Droplet with Ubuntu 18.04.

- SimpleBackups - DigitalOcean Server & Database Backups

- How to mount DigitalOcean Spaces on droplets with s3fs

- How to Mount S3/Wasabi/DigitalOcean Storage Bucket on CentOS and Ubuntu using S3FS

- How to Mount S3 Bucket on CentOS and Ubuntu using S3FS

- s3fs-fuse GitHub

- s3fs-fuse GitHub compilation instructions

- Allowing permissions using s3fs

- s3fs Documentation

Create Wasabi access key with secret key

To generate a Wasabi access key, log in to your Wasabi account and generate one under "Access Keys". Be sure to download the credentials.csv file, as it contains your key ID and secret key.
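The s3fs password file created in the next step is a single line of the form ACCESS_KEY_ID:SECRET_ACCESS_KEY, and s3fs rejects a password file that other users can read. As a minimal sketch, assuming you would rather create the file from Python than with echo and chmod, the helper below writes that line with owner-only permissions. The function name is made up for this example and is not part of s3fs.

```python
import os


def write_s3fs_passwd(path, access_key_id, secret_access_key):
    """Write an s3fs-style password file readable only by its owner."""
    line = f"{access_key_id}:{secret_access_key}\n"
    # Create (or truncate) the file with mode 0600 from the start.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(line)
    # Enforce 0600 even if the file already existed with looser permissions.
    os.chmod(path, 0o600)
    return path
```

Writing to a system location such as /etc/passwd-s3fs would require running the script as root.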

# Create access key file (the path must match the passwd_file option used when mounting)

echo [YOUR_WASABI_ACCESS_KEY_ID]:[YOUR_WASABI_SECRET_ACCESS_KEY] | sudo tee /etc/passwd-s3fs > /dev/null

sudo chmod 600 /etc/passwd-s3fs

Uninstall and reinstall s3fs

### Ubuntu Systems ###

sudo apt-get update

sudo apt-get remove fuse

### Ubuntu Systems ###

sudo apt-get install build-essential libcurl4-openssl-dev libxml2-dev mime-support

sudo apt install s3fs

Create directories and mount Wasabi storage

- -o nonempty: allow mounting even though the target directory is not empty

- -o allow_other: do not restrict access to the mount to just the user who mounted it (root here)
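For non-root users, FUSE only honours allow_other when user_allow_other is enabled in /etc/fuse.conf, which is why the next step edits that file. A minimal sketch, assuming you want to confirm the setting from Python rather than by eye; the function name is hypothetical:

```python
def has_user_allow_other(conf_text):
    """Return True if the fuse.conf text has an uncommented user_allow_other line."""
    for raw_line in conf_text.splitlines():
        # Drop anything after a '#' and surrounding whitespace.
        line = raw_line.split("#", 1)[0].strip()
        if line == "user_allow_other":
            return True
    return False
```

Passing it the contents of /etc/fuse.conf (for example, open("/etc/fuse.conf").read()) should return True once the line has been uncommented.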

sudo vim /etc/fuse.conf

# uncomment the following:

user_allow_other

# Make temporary cache directory and the desired mount

mkdir /tmp/cache /opt/mytardis/mydata_storage

# give these directories open read-write access

chmod 777 /tmp/cache /opt/mytardis/mydata_storage

# mount your wasabi storage bucket

# take note that "mytardis-mydata" is the name of my wasabi bucket

sudo s3fs mytardis-mydata /opt/mytardis/mydata_storage -o passwd_file=/etc/passwd-s3fs -o url=https://s3.wasabisys.com -o nonempty -o allow_other

## setup MyData directories

su tardis

# -p recursively makes directories

sudo mkdir -p /opt/mytardis/mydata_storage/receiving

sudo mkdir -p /opt/mytardis/mydata_storage/complete

Fstab

You might find yourself wondering how to ensure a mount is re-mounted after a reboot. /etc/fstab is the configuration file that Ubuntu reads at boot to mount storage automatically.

- How to Mount S3/Wasabi/DigitalOcean Storage Bucket on CentOS and Ubuntu 20 using S3FS

- Introduction to fstab
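Each fstab entry is one line with six whitespace-separated fields: the device (for s3fs, s3fs#bucket-name), the mount point, the filesystem type, a comma-separated option list, and the dump and fsck-pass numbers. As a rough sketch, the hypothetical helper below assembles such a line so the fields are easier to see. The bucket, paths, and options in the example call are the ones used elsewhere in this post; the helper itself is only an illustration.

```python
def s3fs_fstab_entry(bucket, mount_point, options, dump=0, fsck_pass=0):
    """Build a single /etc/fstab line for an s3fs bucket mount."""
    fields = [
        f"s3fs#{bucket}",    # device: s3fs prefix plus bucket name
        mount_point,         # where the bucket appears locally
        "fuse",              # filesystem type
        ",".join(options),   # mount options, comma-separated
        str(dump),           # dump flag (0 = not backed up by dump)
        str(fsck_pass),      # fsck order (0 = skip filesystem checks)
    ]
    return " ".join(fields)


entry = s3fs_fstab_entry(
    "mytardis-mydata",
    "/opt/mytardis/mydata_storage",
    ["allow_other", "use_cache=/tmp/cache", "url=https://s3.wasabisys.com"],
)
print(entry)
```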

Adding to fstab is as simple as vim:

sudo vim /etc/fstab

# add to the bottom of the file

# url points s3fs at Wasabi rather than its default AWS endpoint

s3fs#mytardis-mydata /opt/mytardis/mydata_storage fuse allow_other,use_cache=/tmp/cache,url=https://s3.wasabisys.com 0 0

:wq!

S3 Storage + Django Settings

If you are setting up S3 storage with Django, the setup is done in code rather than on the command line and does not require fstab or mounting. Django-Storages is a very useful Python library for Django that simplifies direct connections to a variety of storage backends. The following steps are from Django with Wasabi S3 and use S3 storage as the primary location for Django static files. A more in-depth description of Django can be found in Mytardis and You and MyTardis + MyData & PostgreSQL. MyTardis has already been integrated with Django-Storages, so its setup will differ from what's described below.

- django-storages docs

- Django with Wasabi S3

- django-storages GitHub

- MyTardis S3 settings

- MyTardis storage backends docs
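The Wasabi settings added to settings.py in the steps that follow all derive from three values: the bucket name, the region, and the static-files prefix. As a sketch only, the hypothetical helper below builds the same URLs and reads the keys from environment variables instead of hard-coding them. The variable names WASABI_ACCESS_KEY_ID and WASABI_SECRET_ACCESS_KEY are assumptions made for this example, not names that django-storages looks for.

```python
import os


def wasabi_static_settings(bucket, region, location="static", env=None):
    """Return Django-style settings for serving static files from Wasabi."""
    env = os.environ if env is None else env
    custom_domain = f"{bucket}.s3.{region}.wasabisys.com"
    return {
        # Hypothetical environment variable names; adjust to your deployment.
        "AWS_ACCESS_KEY_ID": env["WASABI_ACCESS_KEY_ID"],
        "AWS_SECRET_ACCESS_KEY": env["WASABI_SECRET_ACCESS_KEY"],
        "AWS_STORAGE_BUCKET_NAME": bucket,
        "AWS_S3_ENDPOINT_URL": f"https://s3.{region}.wasabisys.com",
        "AWS_S3_CUSTOM_DOMAIN": custom_domain,
        "AWS_LOCATION": location,
        "STATIC_URL": f"https://{custom_domain}/{location}/",
    }
```

One way to use it would be globals().update(wasabi_static_settings("Wasabi-Bucket-Name", "us-east-2")) near the bottom of settings.py, which defines the same names as the manual assignments.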

# install django-storages and boto3

pip install django-storages boto3

# enter settings.py in your Django project folder

sudo vim settings.py

# enable in INSTALLED_APPS

INSTALLED_APPS = [

...

'storages',

]

# Add Wasabi S3 access settings to bottom of settings.py

AWS_ACCESS_KEY_ID = 'Wasabi-Access-Key'

AWS_SECRET_ACCESS_KEY = 'Wasabi-Secret-Key'

AWS_STORAGE_BUCKET_NAME = 'Wasabi-Bucket-Name'

AWS_S3_ENDPOINT_URL = 'https://s3.us-east-2.wasabisys.com'

AWS_S3_CUSTOM_DOMAIN = '%s.s3.us-east-2.wasabisys.com' % AWS_STORAGE_BUCKET_NAME

AWS_LOCATION = 'static'

STATICFILES_DIRS = [

os.path.join(BASE_DIR, 'mytardis/static'),

]

STATIC_URL = 'https://%s/%s/' % (AWS_S3_CUSTOM_DOMAIN,AWS_LOCATION)

STATICFILES_STORAGE = 'storages.backends.s3boto3.S3Boto3Storage'

# save and quit

:wq!

# enter your virtual environment

workon mytardis

# collect static files from within the upper-most Django project directory

python manage.py collectstatic

Cron and file synchronization with RClone

RClone works seamlessly with several backends, including Wasabi S3 storage. If you are copying data to multiple locations, I highly recommend RClone. For more information, RClone and Moving Cloud Storage offers guidance on how to set RClone up. Another use case is automating the copying or synchronizing of data between multiple locations. Cron is the standard Ubuntu tool for running regularly scheduled tasks, which are listed in a crontab file. For task queue management, see deployments like RabbitMQ+Celery.

- RClone

- RClone and Moving Cloud Storage

- Configure Rclone with Wasabi

- Cron

- RabbitMQ

- Celery

- Cron time string format

My regularly scheduled task with Cron triggered a bash script to send and receive data through RClone and call a Python script to parse and filter the incoming data. Part of the data being transferred included zipped archives, which, when combined with S3 storage, cause incorrect file sizes and corrupt transfer error messages. To get around these issues, the --ignore-checksum --ignore-size --s3-upload-cutoff 0 --no-gzip-encoding flags were added to the bash script:

# to create a bash script

sudo vim /home/tardis/rclone-cron.sh

# https://forum.rclone.org/t/error-about-corrupted-transfer/11824/6

# added --ignore-checksum --ignore-size --s3-upload-cutoff 0 --no-gzip-encoding

# because .gz and s3 combined lead to incorrect file sizes and "corrupt transfer" error msgs

=== rclone-cron.sh

#!/bin/bash

if pidof -o %PPID -x "rclone-cron.sh"; then

exit 1

fi

/home/tardis/work/src/rclone/rclone copy wasabi:my-storage-bucket/CORE/ /CORE/ -P --update --min-age 15m --exclude-if-present "**/Thumbnail_Images/**" --exclude-if-present "**/Data/**" --log-file=/home/tardis/rclone-download.log --create-empty-src-dirs --ignore-case --ignore-checksum --ignore-size --s3-upload-cutoff 0 --no-gzip-encoding

source /home/tardis/.virtualenvs/mytd-env/bin/activate

python /home/tardis/mytardis-mydata-parser/run_server.py

exit

# save and exit

:wq!

Before you are done, make sure the file has proper permissions. If these are not set, then the file will not be executed by Cron.

# make sure it can be executed by changing file permissions

sudo chmod a+x /home/tardis/rclone-cron.sh

To schedule the /home/tardis/rclone-cron.sh bash script to run regularly at midnight, I used 0 0 * * *. Cron time string format offers a great table that summarizes how to set up a Cron time string:

# to edit root's cron jobs

sudo crontab -e

# add to bottom

0 0 * * * su tardis -c "/home/tardis/rclone-cron.sh" >/dev/null 2>&1
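A cron time string has five space-separated fields: minute, hour, day of month, month, and day of week, with * meaning "every". As a small illustration (the function name is made up for this sketch, and it only labels the fields rather than validating them), the schedule above can be broken down like this:

```python
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def describe_cron(time_string):
    """Map the five fields of a cron time string to their names."""
    parts = time_string.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError(f"expected 5 fields, got {len(parts)}")
    return dict(zip(CRON_FIELDS, parts))


print(describe_cron("0 0 * * *"))
```

Read field by field, 0 0 * * * means minute 0 of hour 0 on every day of every month, which is midnight daily.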